Study of Mish activation function in transfer learning with code and discussion

paper, official code, fastai discussion thread, my notebook

The Mish activation function is proposed in the paper Mish: A Self Regularized Non-Monotonic Neural Activation Function. The experiments in the paper show that it achieves better accuracy than ReLU. The fastai community has also run many experiments and likewise obtained better results than with ReLU.

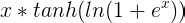

Mish is defined as x * tanh(softplus(x)), where softplus(x) = ln(1 + e^x). Written out in full, f(x) = x * tanh(ln(1 + e^x)).

PyTorch implementation of Mish activation function is given below.
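The snippet below is a minimal sketch of such a module, written directly from the definition x * tanh(softplus(x)); it is not copied from the official code. The class name `Mish` and the sample input are my own choices. Recent PyTorch releases also ship a built-in `torch.nn.Mish`, which is probably the better option when it is available.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Mish(nn.Module):
    """Mish activation: x * tanh(softplus(x))."""

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # F.softplus computes ln(1 + exp(x)) in a numerically stable way.
        return x * torch.tanh(F.softplus(x))


mish = Mish()
x = torch.tensor([-2.0, 0.0, 2.0])  # illustrative inputs
y = mish(x)  # element-wise, same shape as x; Mish maps 0 to 0
```

Because the module has no parameters, it can be dropped in anywhere `nn.ReLU()` is used.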

To build intuition for this activation function, let’s first look at its plot.

Important properties of Mish:

- Unbounded Above:- Being unbounded above is a desirable property for an activation function because it avoids saturation, which slows training down as gradients approach zero.

- Bounded Below:- Being bounded below is desired because it results in strong regularization effects.

- Non-monotonic:- This is the important factor in Mish. It preserves small negative values, which lets the network learn better and improves gradient flow in the negative region, unlike ReLU, where all negative inputs are mapped to zero.

- Continuity:- Mish’s first derivative is continuous over the entire domain, which helps with effective optimization and generalization. ReLU, by contrast, has a derivative that is discontinuous at zero.
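The properties listed above can be checked numerically. The sketch below uses only the Python standard library; the grid over [-10, 10] and the probe points are arbitrary choices of mine, not values from the paper.

```python
import math


def softplus(x: float) -> float:
    # Stable form of ln(1 + e^x): max(x, 0) + ln(1 + e^(-|x|)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    return x * math.tanh(softplus(x))


# Evaluate Mish on an evenly spaced grid over [-10, 10] with step 0.01.
xs = [i / 100.0 for i in range(-1000, 1001)]
ys = [mish(x) for x in xs]

# Bounded below: the smallest value is a small negative number near x = -1.2.
min_value = min(ys)
argmin = xs[ys.index(min_value)]

# Non-monotonic: the function falls on the left of the minimum and rises after it.
decreasing_left = mish(-3.0) > mish(-1.5)
increasing_right = mish(-1.0) < mish(1.0)

# Unbounded above: for large x, mish(x) is practically equal to x.
large_gap = abs(mish(50.0) - 50.0)

print(f"min {min_value:.4f} at x = {argmin:.2f}")
```

On this grid the minimum comes out at roughly -0.31, which is the lower bound, while the output keeps growing with x on the positive side.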

To compute the first derivative, expand the tanh(softplus(x)) term, which gives (e^(2x) + 2e^x) / (e^(2x) + 2e^x + 2), and then apply the product rule.
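As a sanity check on this derivation, the sketch below compares the expanded form of tanh(softplus(x)) against the direct computation, and compares the product-rule derivative against a central finite difference. The function names, the probe points and the step size `h` are my own choices for illustration.

```python
import math


def softplus(x):
    # Stable form of ln(1 + e^x).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def mish(x):
    return x * math.tanh(softplus(x))


def tanh_softplus_expanded(x):
    # tanh(ln(1 + e^x)) = (e^(2x) + 2e^x) / (e^(2x) + 2e^x + 2)
    w = math.exp(2 * x) + 2 * math.exp(x)
    return w / (w + 2)


def mish_grad(x):
    # Product rule: d/dx [x * t(x)] = t(x) + x * t'(x),
    # where t(x) = tanh(softplus(x)) and t'(x) = (1 - t(x)^2) * sigmoid(x).
    t = math.tanh(softplus(x))
    return t + x * (1.0 - t * t) * sigmoid(x)


def central_difference(f, x, h=1e-5):
    return (f(x + h) - f(x - h)) / (2 * h)


points = [-3.0, -1.0, 0.0, 0.5, 2.0]
max_expand_err = max(
    abs(tanh_softplus_expanded(x) - math.tanh(softplus(x))) for x in points
)
max_grad_err = max(
    abs(mish_grad(x) - central_difference(mish, x)) for x in points
)
```

At x = 0 the derivative reduces to tanh(ln 2) = 0.6, which is a convenient value to check by hand.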

When comparing Mish against ReLU, use a lower learning rate for Mish. Learning rates in the range of roughly 1e-5 to 1e-1 showed good results.

Testing Mish against ReLU

Rather than training from scratch, which is already done in the paper, I test Mish in a transfer learning setting. When we use a pretrained model on our own dataset, we keep the pretrained CNN filter weights (they are only updated if we fine-tune) but randomly initialize the final fully-connected layers (the head of the model). So I compare ReLU and Mish in these fully-connected layers.

I use OneCycle training. In case you are unfamiliar with the training techniques used here, I have written a complete notebook summarizing them in fastai. You can check the notebook here.

I use the CIFAR10 and CIFAR100 datasets to test a pretrained ResNet50 model. I run the model for 10 epochs and compare the results at the fifth and tenth epochs. The results are averaged across 3 runs using different learning rates (1e-2, 5e-3, 1e-3). The weights of the CNN filters are not updated; only the fully connected layers are trained.

For the fully connected layers, I would use the following architecture. In case of Mish, replace the ReLU with Mish.
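To make the setup concrete, here is a hypothetical sketch of a head with a single activation that can be switched between ReLU and Mish, plus a helper that freezes the backbone. The layer order, the hidden size of 512, the dropout probability and the names `make_head` and `freeze` are assumptions for illustration and are not the exact architecture used in these experiments. The tiny convolutional `backbone` is only a stand-in for a pretrained ResNet50, chosen so the example runs without downloading weights; 2048 matches the number of features ResNet50 produces after pooling.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Mish(nn.Module):
    def forward(self, x):
        return x * torch.tanh(F.softplus(x))


def make_head(in_features, n_classes, hidden=512, p=0.5, use_mish=False):
    # hidden and p are illustrative values, not taken from the experiments.
    act = Mish() if use_mish else nn.ReLU(inplace=True)
    return nn.Sequential(
        nn.Linear(in_features, hidden),
        act,  # the only activation in the head; this is what gets swapped
        nn.BatchNorm1d(hidden),
        nn.Dropout(p),
        nn.Linear(hidden, n_classes),
    )


def freeze(module):
    # Stop gradient updates for every parameter in the module.
    for param in module.parameters():
        param.requires_grad = False


n_features = 2048
# Stand-in for a pretrained CNN body (NOT a real ResNet50).
backbone = nn.Sequential(
    nn.Conv2d(3, n_features, kernel_size=3),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
)
freeze(backbone)

head = make_head(n_features, n_classes=10, use_mish=True)
model = nn.Sequential(backbone, head)

# Only the head's parameters would be handed to the optimizer.
trainable = [p for p in model.parameters() if p.requires_grad]

logits = model(torch.randn(4, 3, 8, 8))  # dummy batch of 4 tiny images
```

Passing `use_mish=False` gives the ReLU baseline with an otherwise identical head, so the activation is the only difference between the two runs.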

The final results are shown below. I observed that Mish needed a smaller learning rate, otherwise it overfit quickly, which suggests that it requires stronger regularization than ReLU. This was consistent across multiple runs. Generally, you can get away with a higher learning rate when using ReLU, but with Mish a higher learning rate always led to overfitting.

Although the results are quite similar, Mish gives some marginal improvements. This is a very limited test, as only one Mish activation is used in the entire network and the network has been trained for only 10 epochs.

Visualization of Output landscape

We use a 5-layer, randomly initialized, fully connected neural network to visualize the output landscapes of ReLU and Mish. The code and the results are given below.
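One way such a visualization could be produced is sketched below: a random network maps each (x, y) coordinate on a grid to a single scalar, and the resulting 2D array is the output landscape. The width of 64, the grid range of [-5, 5], the resolution and the seed are assumptions on my part, as are the helper names `random_mlp` and `output_landscape`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Mish(nn.Module):
    def forward(self, x):
        return x * torch.tanh(F.softplus(x))


def random_mlp(act_cls, width=64, depth=5, seed=0):
    # depth counts the Linear layers; width and seed are arbitrary choices.
    # Activations have no parameters, so the same seed gives both networks
    # identical random weights and only the activation differs.
    torch.manual_seed(seed)
    layers = [nn.Linear(2, width), act_cls()]
    for _ in range(depth - 2):
        layers += [nn.Linear(width, width), act_cls()]
    layers.append(nn.Linear(width, 1))
    return nn.Sequential(*layers)


def output_landscape(model, lim=5.0, steps=100):
    # Feed every (x, y) coordinate of a square grid through the network.
    axis = torch.linspace(-lim, lim, steps)
    coords = torch.cartesian_prod(axis, axis)  # shape (steps * steps, 2)
    with torch.no_grad():
        return model(coords).reshape(steps, steps)


relu_map = output_landscape(random_mlp(nn.ReLU))
mish_map = output_landscape(random_mlp(Mish))
```

Each map can then be rendered as a heatmap, for example with matplotlib's `imshow`, to compare the two activations side by side.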

From the above output landscapes, we can observe that Mish produces a smoother output landscape, which results in smoother loss functions that are easier to optimize, and thus the network generalizes better.

I am a deep learning researcher with an interest in computer vision and natural language processing. Check out my other posts and my other work at https://kushajveersingh.github.io/.